- The INRIA Holidays dataset for evaluation of image search

- The INRIA Copydays dataset for evaluation of copy detection

- The BIGANN evaluation dataset for evaluation of approximate nearest neighbor search algorithms

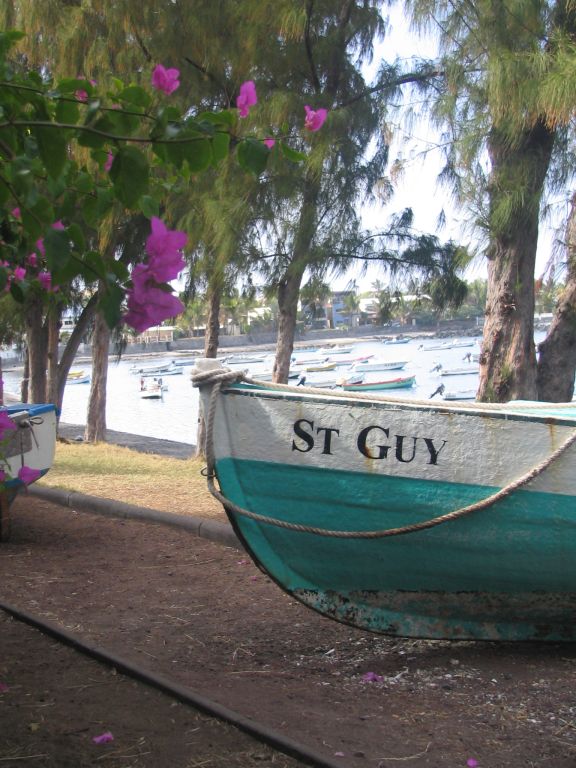

INRIA Holidays dataset


Copyright Notice

INRIA is the copyright holder of all the images included in the dataset. If you use this dataset, please cite the following paper:

Hervé Jégou, Matthijs Douze and Cordelia Schmid

"Hamming Embedding and Weak Geometric Consistency for Large Scale Image Search"

Proceedings of the 10th European Conference on Computer Vision (ECCV), October 2008

This data set is provided "as is" and without any express or implied warranties, including, without limitation, the implied warranties of merchantability and fitness for a particular purpose.

Dataset description

The Holidays dataset is a set of images that mainly consists of our personal holiday photos. The remaining images were taken on purpose to test robustness to various attacks: rotations, viewpoint and illumination changes, blurring, etc. The dataset includes a very large variety of scene types (natural, man-made, water and fire effects, etc.), and the images are in high resolution.

The dataset contains 500 image groups, each of which represents a distinct scene or object. The first image of each group is the query image, and the correct retrieval results are the other images of the group.

The dataset can be downloaded from this page (see details below). The material provided includes:

- the images themselves

- the set of descriptors extracted from these images (see details below)

- a set of descriptors produced, with the same extractor and descriptor, for a distinct dataset (Flickr60K).

- two sets of clusters used to quantize the descriptors. These have been obtained from Flickr60K.

- some pre-processed feature files for one million images, which we used in our ECCV paper to perform the evaluation at large scale.
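Quantizing descriptors against one of the visual dictionaries amounts to a nearest-centroid search. The following is a minimal Python sketch, assuming brute-force search under squared Euclidean distance and descriptors and centroids held as plain lists of numbers. The helper names `quantize` and `bow_histogram` are hypothetical, and this is not claimed to be the assignment code used for the ECCV paper.

```python
from typing import List, Sequence


def quantize(descriptor: Sequence[float], centroids: Sequence[Sequence[float]]) -> int:
    """Return the index of the nearest centroid (squared Euclidean distance).

    Hypothetical brute-force helper, not part of the dataset tools.
    """
    if not centroids:
        raise ValueError("empty visual dictionary")
    best_index, best_dist = -1, float("inf")
    for k, centroid in enumerate(centroids):
        if len(centroid) != len(descriptor):
            raise ValueError("descriptor and centroid dimensions differ")
        dist = sum((a - b) ** 2 for a, b in zip(descriptor, centroid))
        if dist < best_dist:
            best_index, best_dist = k, dist
    return best_index


def bow_histogram(descriptors: Sequence[Sequence[float]],
                  centroids: Sequence[Sequence[float]]) -> List[int]:
    """Count how many descriptors of one image fall into each visual word."""
    hist = [0] * len(centroids)
    for desc in descriptors:
        hist[quantize(desc, centroids)] += 1
    return hist
```

With dictionaries of up to 200K visual words and millions of descriptors, this pure-Python loop would be far too slow in practice; it only illustrates the assignment rule.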

Download and Statistics

Dataset size: 1491 images in total (500 queries and 991 corresponding relevant images)

Number of queries: 500 (one per group)

Number of descriptors produced: 4455091 SIFT descriptors of dimensionality 128

Download images: jpg1.tar.gz and jpg2.tar.gz (1.1GB and 1.6GB)

Download descriptors (526MB)

Download descriptors of Flickr60K (independent dataset, 636MB)

Download visual dictionaries (100, 200, 500, 1K, 2K, 5K, 10K, 20K, 50K, 100K and 200K visual words, 144MB) learned on Flickr60K

Download pre-computed features for one million images, stored in 1000 archives of 1000 feature files each (235GB in total).

Two binary file formats are used.

.siftgeo format

Descriptors are stored raw (as consecutive binary records), together with the region information provided by the software of Krystian Mikolajczyk. There is no header (use the file length to find the number of descriptors).

A descriptor takes 168 bytes: nine floats and one int (4 bytes each, stored little-endian), followed by desdim = 128 one-byte components (9*4 + 4 + 128 = 168):

| field | field type | description |
|---|---|---|
| x | float | horizontal position of the interest point |
| y | float | vertical position of the interest point |
| scale | float | scale of the interest region |
| angle | float | angle of the interest region |
| mi11 | float | affine matrix component |
| mi12 | float | affine matrix component |
| mi21 | float | affine matrix component |
| mi22 | float | affine matrix component |
| cornerness | float | saliency of the interest point |
| desdim | int | dimension of the descriptors |
| component | byte*desdim | the descriptor vector (desdim components) |

A matlab file to read .siftgeo files.
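For readers not using MATLAB, here is a minimal Python sketch of a .siftgeo reader based only on the layout in the table above: 9 little-endian float32 fields, an int32 `desdim`, then `desdim` unsigned bytes. The names `read_siftgeo`, `load_siftgeo`, `count_siftgeo` and `SiftGeoRecord` are hypothetical; the sketch uses only the standard `struct` module.

```python
import struct
from typing import List, NamedTuple, Tuple

# x, y, scale, angle, mi11, mi12, mi21, mi22, cornerness: 9 little-endian float32
_GEOM = struct.Struct("<9f")
# desdim: one little-endian int32
_DIM = struct.Struct("<i")


class SiftGeoRecord(NamedTuple):
    geom: Tuple[float, ...]  # the 9 region fields, in table order
    desc: bytes              # desdim descriptor components (0..255 each)


def count_siftgeo(n_bytes: int, desdim: int = 128) -> int:
    """Number of descriptors from the file length (the file has no header)."""
    record = _GEOM.size + _DIM.size + desdim  # 36 + 4 + 128 = 168 for SIFT
    if n_bytes % record:
        raise ValueError("file length is not a multiple of the record size")
    return n_bytes // record


def read_siftgeo(data: bytes) -> List[SiftGeoRecord]:
    """Parse a whole .siftgeo buffer into records."""
    records, off = [], 0
    while off < len(data):
        geom = _GEOM.unpack_from(data, off)
        off += _GEOM.size
        (desdim,) = _DIM.unpack_from(data, off)
        off += _DIM.size
        if desdim < 0 or off + desdim > len(data):
            raise ValueError("truncated or corrupt .siftgeo record")
        records.append(SiftGeoRecord(geom, data[off:off + desdim]))
        off += desdim
    return records


def load_siftgeo(path: str) -> List[SiftGeoRecord]:
    """Read and parse a .siftgeo file from disk."""
    with open(path, "rb") as f:
        return read_siftgeo(f.read())
```

For example, `load_siftgeo("xxxx.siftgeo")[0].desc` gives the first descriptor as raw bytes, and `list(...)` on it yields the integer components.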

.fvecs format

This format is used to store centroids. As with the .siftgeo format, there is no header, and centroids are stored raw. Each centroid takes 516 bytes (one 4-byte int followed by 128 4-byte floats), as shown below.

| field | field type | description |
|---|---|---|
| desdim | int | descriptor dimension |
| components | float*desdim | the centroid components |

A matlab file to read .fvecs files
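A corresponding Python sketch for .fvecs files is shown below. It assumes little-endian storage; the description above states the byte order only for .siftgeo, so this is an assumption. The function names `read_fvecs` and `write_fvecs` are hypothetical, and only the standard `struct` module is used.

```python
import struct
from typing import List, Sequence, Tuple


def read_fvecs(data: bytes) -> List[Tuple[float, ...]]:
    """Parse a raw .fvecs buffer: repeated [int32 desdim][desdim float32].

    Assumption: little-endian byte order, as for .siftgeo.
    """
    vecs, off = [], 0
    while off < len(data):
        (desdim,) = struct.unpack_from("<i", data, off)
        off += 4
        if desdim < 0 or off + 4 * desdim > len(data):
            raise ValueError("truncated or corrupt .fvecs record")
        vecs.append(struct.unpack_from(f"<{desdim}f", data, off))
        off += 4 * desdim
    return vecs


def write_fvecs(vectors: Sequence[Sequence[float]]) -> bytes:
    """Inverse of read_fvecs, useful for round-trip checks."""
    out = bytearray()
    for v in vectors:
        out += struct.pack("<i", len(v))
        out += struct.pack(f"<{len(v)}f", *v)
    return bytes(out)
```

With 128-dimensional centroids, each record is 4 + 4*128 = 516 bytes, so the number of centroids in a file is its length divided by 516.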

Descriptor extraction

Before computing descriptors, we resized the images to a maximum of 786432 pixels (1024×768) and performed a slight intensity normalization. For descriptor extraction, we used a modified version of the software of Krystian Mikolajczyk (thank you Krystian!).
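To see what the 786432-pixel limit means for a given photo, the following sketch computes a target size with the same aspect ratio. It assumes the image is only scaled down, uniformly, when it exceeds the limit; pamscale's exact rounding may differ, and `target_size` is a hypothetical name.

```python
import math
from typing import Tuple

MAX_PIXELS = 1024 * 768  # 786432, the value passed to pamscale -pixels


def target_size(width: int, height: int, max_pixels: int = MAX_PIXELS) -> Tuple[int, int]:
    """Size with (nearly) the same aspect ratio and at most max_pixels pixels.

    Assumption: images already under the limit are left unchanged, and
    larger ones are scaled down by the same factor on both axes.
    """
    if width * height <= max_pixels:
        return width, height
    s = math.sqrt(max_pixels / (width * height))
    # Flooring both sides keeps the product at or below max_pixels.
    return max(1, math.floor(width * s)), max(1, math.floor(height * s))
```

For example, `target_size(2048, 1536)` returns `(1024, 768)`, and a 3000×2000 photo would be reduced to about 1086×724.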

We used the Hessian-Affine extractor and the SIFT descriptor. Note, however, that our version of the code may differ from the one currently available on the web. If so, this should not noticeably affect the results.

The following commands were used to extract the descriptors. Note that we used the default values for descriptor generation.

```bash
infile=xxxx.jpg
# Replace only the extension (safe even if the directory name contains "jpg")
tmpfile=${infile%.jpg}.pgm
outfile=${infile%.jpg}.siftgeo

# Rescaling and intensity normalization
djpeg "$infile" | ppmtopgm |
  pnmnorm -bpercent=0.01 -wpercent=0.01 -maxexpand=400 |
  pamscale -pixels $((1024*768)) > "$tmpfile"

# Compute descriptors (must be a single command line)
compute_descriptors -i "$tmpfile" -o4 "$outfile" -hesaff -sift
```

The output format option -o4 produces a binary .siftgeo file, whose format is described above.

The other available formats are described here.

NEW VERSION: the new version of the descriptor software (pre-compiled). It is almost the same as the one above, but it also supports dense sampling. In addition, it no longer depends on ImageMagick, for improved portability; as a result, JPG input is no longer supported. For the same set of parameters, there may be small differences between the output of this version and that of the previous one, but these differences are mainly a matter of numerical precision, and the outputs of the two programs are intended to be compatible.

Evaluation protocol

We used the following protocol to evaluate our image search system:

- download the EVALUATION PACKAGE.

- performance is measured by the mean average precision (mAP) over all 500 queries. Our evaluation package is inspired by the Oxford evaluation software.

- the query images are counted neither as true positives nor as false positives when computing the mAP. They are treated as "junk" images, as defined in the Oxford evaluation software.

- we learned all our parameters (clustering, etc.) on a distinct dataset, to better reflect the accuracy of a real system (where the relevant images are a small fraction of the overall image dataset).
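The protocol above can be sketched in Python. This is not the code of the evaluation package: it is a minimal sketch, assuming each query's result is a ranked list of image identifiers and its ground truth is the set of the other images in its group. Computing AP as the area under the precision-recall curve with trapezoids is an assumption here, chosen to mirror Oxford-style AP; the evaluation package remains the reference. Function names and identifiers are hypothetical.

```python
from typing import Dict, List, Sequence, Set


def average_precision(ranked: Sequence[str], query: str, relevant: Set[str]) -> float:
    """AP of one query, integrating the precision-recall curve with trapezoids.

    The query image is dropped from the ranking ("junk"): it counts
    neither as a true positive nor as a false positive.
    """
    if not relevant:
        return 0.0
    filtered: List[str] = [img for img in ranked if img != query]
    # 0-based positions of the true positives in the filtered ranking
    tp_ranks = [i for i, img in enumerate(filtered) if img in relevant]
    recall_step = 1.0 / len(relevant)
    ap = 0.0
    for ntp, rank in enumerate(tp_ranks):
        # Precision just before and just after retrieving this true positive
        p0 = 1.0 if rank == 0 else ntp / rank
        p1 = (ntp + 1) / (rank + 1)
        ap += (p0 + p1) / 2.0 * recall_step
    return ap


def mean_average_precision(results: Dict[str, Sequence[str]],
                           groups: Dict[str, Set[str]]) -> float:
    """mAP over all queries; groups maps each query to its relevant images."""
    if not groups:
        raise ValueError("no queries")
    aps = [average_precision(results.get(q, []), q, rel) for q, rel in groups.items()]
    return sum(aps) / len(aps)
```

Relevant images that never appear in a result list simply contribute nothing, which lowers the AP of that query.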

Papers reporting results on Holidays

Matthijs Douze has collected a few results reported on INRIA Holidays, mostly from major computer vision conferences (this list is not exhaustive): Results on Holidays.

Copydays dataset

Copyright Notice

INRIA is the copyright holder of all the images included in the dataset. This data set is provided "as is" and without any express or implied warranties, including, without limitation, the implied warranties of merchantability and fitness for a particular purpose.

Dataset description

The Copydays dataset is a set of images composed exclusively of our personal holiday photos. Each image has been subjected to three kinds of artificial attacks: JPEG, cropping and "strong". The motivation is to evaluate the behavior of indexing algorithms on the most common kinds of image copies (for video, you may be interested in our video copy generation software). Because of its small size, it is evaluated by merging the original images into a large image database.

The dataset can be downloaded from this page (see details below). The material provided includes the images themselves. On request, we may also provide the set of descriptors extracted from these images. For the evaluation, one should ideally use the same set of distractor images downloaded from Flickr as we used; we can provide them on request.

Download and Statistics

Download images:

Original images (208MB)

Cropped images (from 10% to 80% of the image surface removed, 2.0GB)

Scale+JPEG attacked images (77MB), scale: 1/16 (pixels), JPEG quality factors: 75, 50, 30, 20, 15, 10, 8, 5, 3

229 strongly attacked images (60MB): print and scan, blur, paint, ...

You may be interested in downloading some material provided for the Holidays dataset: the visual vocabularies, the set of features extracted from an independent dataset, some pre-processed features for one million images, the software used to compute the features, the extraction procedure and, finally, the evaluation protocol.