Daily Action Localization in YouTube videos

DALY is intended for research in action recognition and is suitable for training and testing temporal and spatial localization algorithms.

It contains high-quality temporal and spatial annotations for 10 actions across 31 hours of video (3.3M frames), an order of magnitude more than standard action localization datasets.
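As a quick consistency check on these two figures, dividing the frame count by the total duration gives the average frame rate. Both inputs are rounded, so the result is only approximate, but it lands close to the common 30 fps video rate:

$$
\frac{3.3 \times 10^{6}\ \text{frames}}{31\ \text{h} \times 3600\ \text{s/h}} = \frac{3.3 \times 10^{6}\ \text{frames}}{111\,600\ \text{s}} \approx 29.6\ \text{frames/s}
$$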

The dataset was developed by Philippe Weinzaepfel, Xavier Martin and Cordelia Schmid.

It is published by THOTH, a research team at Inria.

If you use this dataset, please cite the following paper:

@article{weinzaepfel2016human,
  title={Human Action Localization with Sparse Spatial Supervision},
  author={Weinzaepfel, Philippe and Martin, Xavier and Schmid, Cordelia},
  journal={arXiv preprint arXiv:1605.05197},
  year={2016}
}

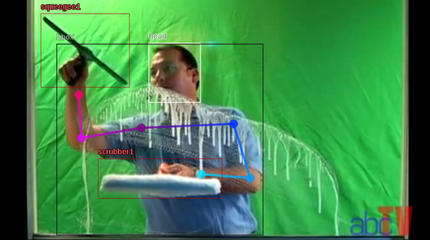

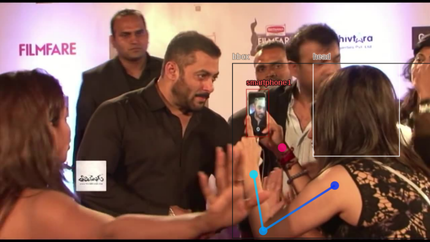

Below are some example frames taken from the dataset.


DALY provides the following spatial annotations:

- bounding box around the action

- upper body pose annotation, including a bounding box around the head

- bounding box around object(s) involved in the action

Special flags mark instances that are outside the field of view, that are mirror reflections, and other special cases.
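To make the annotation types above concrete, here is a minimal sketch of how one annotated instance could be modeled in Python. It is not the official DALY file format: the class names, the `(x1, y1, x2, y2)` box convention, the `keypoints` dictionary, and the flag names `outside_field_of_view` and `is_reflection` are all hypothetical choices for illustration. The helper `usable_for_training` (also hypothetical) shows one possible use of the flags, dropping flagged instances before training.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical box convention: (x1, y1, x2, y2) in pixel coordinates.
Box = Tuple[float, float, float, float]


@dataclass
class UpperBodyPose:
    head_box: Box  # bounding box around the head
    # Hypothetical: named upper-body keypoints mapped to (x, y) positions.
    keypoints: Dict[str, Tuple[float, float]] = field(default_factory=dict)


@dataclass
class SpatialAnnotation:
    frame_index: int  # frame of the video this annotation refers to
    action_box: Box  # bounding box around the action
    pose: Optional[UpperBodyPose] = None  # upper-body pose, if annotated
    object_boxes: List[Box] = field(default_factory=list)  # object(s) involved
    # Hypothetical names for two of the special flags described above.
    outside_field_of_view: bool = False
    is_reflection: bool = False


def usable_for_training(annotations: List[SpatialAnnotation]) -> List[SpatialAnnotation]:
    """Return only the annotations that carry none of the special flags."""
    return [
        a for a in annotations
        if not (a.outside_field_of_view or a.is_reflection)
    ]


# Usage with made-up coordinates: the second instance is a mirror reflection.
anns = [
    SpatialAnnotation(
        frame_index=120,
        action_box=(10, 20, 200, 300),
        pose=UpperBodyPose(head_box=(80, 20, 130, 80)),
        object_boxes=[(150, 200, 190, 260)],
    ),
    SpatialAnnotation(frame_index=340, action_box=(0, 0, 50, 50), is_reflection=True),
]
print(len(usable_for_training(anns)))  # 1
```

Whether flagged instances should be excluded, kept, or handled separately depends on the task; filtering them out is just one illustrative choice.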