Action Movie Franchises

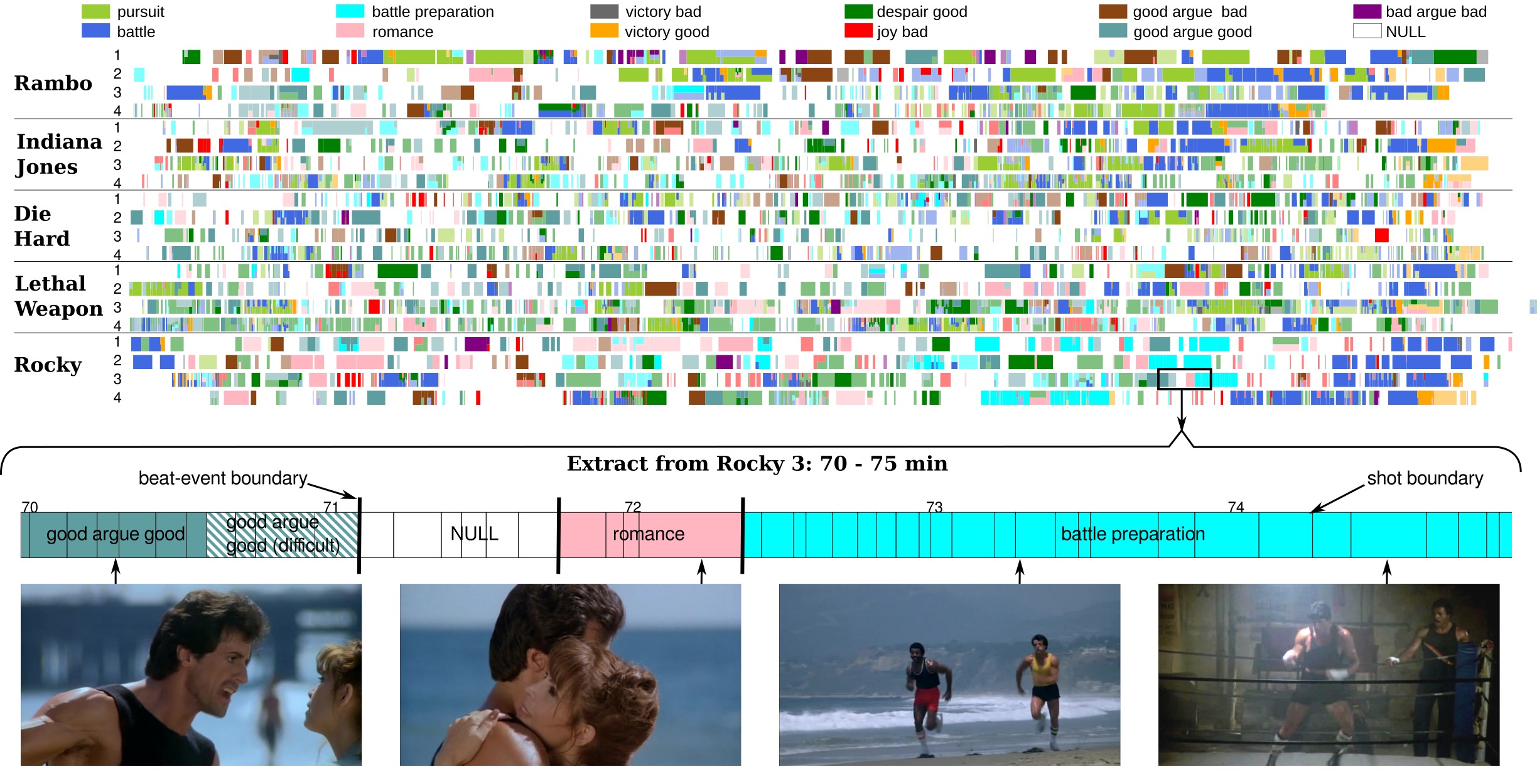

The Action Movie Franchises dataset consists of 20 Hollywood action movies belonging to 5 famous franchises: Rambo, Rocky, Die Hard, Lethal Weapon, and Indiana Jones. Each franchise comprises 4 movies.

Each movie is decomposed into a list of shots, extracted with a shot boundary detector. Each shot is tagged with zero, one, or several labels corresponding to the 11 beat-categories (the label NULL is assigned to shots with zero labels).


Paper

Beat-Event Detection in Action Movie Franchises. D. Potapov, M. Douze, J. Revaud, Z. Harchaoui, C. Schmid. arXiv, 2015. [Pdf], [Supplementary material]

Download

[Annotation, cross-validation folds], [Shot classification scores]

Evaluation code: [classification], [localization]

Descriptors (in fvecs format):
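The descriptors can be read with a few lines of code. The sketch below assumes the usual fvecs layout, in which each vector is stored as a 4-byte little-endian integer giving its dimension, followed by that many 4-byte floats. The function names `read_fvecs` and `write_fvecs` are illustrative and are not part of the dataset tools.

```python
import io
import struct
from typing import BinaryIO, Iterable, Iterator, List


def read_fvecs(stream: BinaryIO) -> Iterator[List[float]]:
    """Yield one vector at a time from a binary stream in fvecs layout.

    Assumed layout per vector: int32 dimension d, then d float32 values,
    all little-endian.
    """
    while True:
        header = stream.read(4)
        if not header:
            return  # clean end of stream
        if len(header) < 4:
            raise ValueError("truncated dimension header")
        (dim,) = struct.unpack("<i", header)
        if dim <= 0:
            raise ValueError(f"invalid dimension: {dim}")
        payload = stream.read(4 * dim)
        if len(payload) != 4 * dim:
            raise ValueError("truncated vector payload")
        yield list(struct.unpack(f"<{dim}f", payload))


def write_fvecs(stream: BinaryIO, vectors: Iterable[List[float]]) -> None:
    """Write vectors in the same assumed layout (useful for round-trip checks)."""
    for vec in vectors:
        stream.write(struct.pack("<i", len(vec)))
        stream.write(struct.pack(f"<{len(vec)}f", *vec))


if __name__ == "__main__":
    # Round trip through an in-memory buffer instead of a real descriptor file.
    buf = io.BytesIO()
    write_fvecs(buf, [[1.0, 2.5, -3.0], [0.0, 0.5, 4.0]])
    buf.seek(0)
    for vec in read_fvecs(buf):
        print(len(vec), vec)
```

To read a downloaded file, open it in binary mode (`open(path, "rb")`) and pass the file object to `read_fvecs`.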

Dataset description

Series of shots with the same category label are grouped together in beat-events if they all depict the same scene (i.e. same characters, same location, same action, etc.). Temporally, we also allow a beat-event to bridge gaps of a few unrelated shots. Each beat-event belongs to a single, non-NULL beat-category.

The set of categories was inspired by the taxonomy of [Snyder, 2005], and motivated by the presence of common narrative structures and beats in action movies. Indeed, category definitions strongly rely on a split of the characters into "good" and "bad" tags, which is typical in such movies. Each category thus involves a fixed combination of heroes and villains: both "good" and "bad" characters are present during "battle" and "pursuit", but only "good" heroes are present in the case of "good argue good".

Intra-class variation is large and stems from several factors: duration, intensity of action, objects and actors, scene location, camera viewpoint, and filming style. Ambiguous cases are marked with the "difficult" tag.

Annotation protocol

Each movie is first temporally segmented into a sequence of shots using a shot boundary detector. Shot boundaries correspond to transitions between different cameras and/or scene locations. The minimal temporal unit in the annotation process is a shot, so temporal boundaries of beat-events always coincide with shot boundaries. Shots can be annotated with zero, one, or several category labels. Shots without an annotation are assigned a NULL label. All occurrences are annotated, so NULL shots are negative instances for each of the 11 beat-event categories.
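Because every occurrence is annotated, the multi-label shot annotations translate directly into one binary problem per category. The following is a minimal sketch of that conversion. It assumes each shot is represented as a set of category names, which is a representation chosen for illustration and not the format of the released annotation files. Only category names mentioned on this page are used in the example.

```python
from typing import Dict, List, Set

NULL = "NULL"


def display_labels(shot: Set[str]) -> Set[str]:
    """Return the labels of a shot, substituting NULL when it has none."""
    return set(shot) if shot else {NULL}


def binary_targets(shots: List[Set[str]], categories: List[str]) -> Dict[str, List[int]]:
    """Build one 0/1 target list per category.

    A shot is positive for a category if it carries that label. All other
    shots, including NULL shots, are negatives for that category.
    """
    return {cat: [1 if cat in shot else 0 for shot in shots] for cat in categories}


if __name__ == "__main__":
    # Hypothetical sequence of four shots.
    shots = [{"battle"}, set(), {"battle", "pursuit"}, {"good argue good"}]
    targets = binary_targets(shots, ["battle", "pursuit", "good argue good"])
    for cat, values in targets.items():
        print(cat, values)
    print([sorted(display_labels(s)) for s in shots])
```

The sketch does not model the "difficult" tag. How such shots are treated is defined by the evaluation code.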

The annotation process was carried out in two passes. First, we manually annotated each shot with zero, one, or several of the 11 beat-category labels. Then, we annotated the beat-events by specifying their category and their beginning and ending shots. We tolerated gaps of 1-2 unrelated shots for sufficiently consistent beat-events. Indeed, movies are often edited into sequences of interleaved shots from two events, e.g. between the main storyline and the "B" story.
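The gap tolerance can be illustrated with a short sketch that merges shots of one category into (first shot, last shot) intervals while bridging at most `max_gap` unrelated shots. This is not the annotation procedure itself: the beat-events in the dataset were annotated manually and also require the shots to depict the same scene, which the code below does not check. The function name and the set-of-labels representation are assumptions made for the example.

```python
from typing import List, Set, Tuple


def group_shots(shot_labels: List[Set[str]], category: str, max_gap: int = 2) -> List[Tuple[int, int]]:
    """Group shots carrying `category` into inclusive (start, end) index intervals.

    Two labelled shots fall in the same interval when at most `max_gap`
    shots without the label lie between them.
    """
    events: List[Tuple[int, int]] = []
    start = last = None
    for index, labels in enumerate(shot_labels):
        if category not in labels:
            continue
        if start is not None and index - last - 1 <= max_gap:
            last = index  # extend the current interval across the gap
        else:
            if start is not None:
                events.append((start, last))
            start = last = index  # open a new interval
    if start is not None:
        events.append((start, last))
    return events


if __name__ == "__main__":
    # Hypothetical shot sequence: a one-shot gap is bridged, a three-shot gap is not.
    labels = [{"battle"}, {"battle"}, set(), {"battle"},
              set(), set(), set(), {"battle"}, {"pursuit"}]
    print(group_shots(labels, "battle"))
    print(group_shots(labels, "pursuit"))
```

Since interval ends are shot indices, the resulting boundaries always coincide with shot boundaries, as in the annotation.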

[Video examples of beat-events]

Annotation statistics

The dataset provides 2 levels of annotation: "shot-level" and "event-level". Durations are given in hours (h) and seconds (s).

| Level | Annotation unit | # positive train units | Average unit duration | Annotation duration | NULL duration | Coverage |
| --- | --- | --- | --- | --- | --- | --- |
| Shot-level | shot | 16864 | 5.4s | 25h29 | 15h42 | 57.1% |
| Event-level | beat-event | 2906 | 35.7s | 28h49 | 14h08 | 61.4% |
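As a sanity check on the table, the average unit duration can be recomputed from the other columns. The sketch below assumes that the average is the annotation duration divided by the number of units, and that a value such as 25h29 means 25 hours and 29 minutes. Both are readings of the table rather than definitions stated on this page, and the helper names are illustrative.

```python
def parse_hm(text: str) -> int:
    """Convert a duration such as '25h29' (hours, minutes) to seconds."""
    hours, minutes = text.split("h")
    return int(hours) * 3600 + int(minutes) * 60


def average_unit_seconds(total: str, units: int) -> float:
    """Average duration of one annotated unit, in seconds."""
    return parse_hm(total) / units


if __name__ == "__main__":
    print(round(average_unit_seconds("25h29", 16864), 1))  # shot-level row
    print(round(average_unit_seconds("28h49", 2906), 1))   # event-level row
```

Under these assumptions the recomputed averages round to the values in the table (5.4s and 35.7s).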

Global view of the dataset annotations:

Localization results

Legend: green - correct detection, red - false alarm, gray - ignored, bottom line - ground truth.

Classification results

Shot categories are shown in different colors, hashes show difficult cases.