I am now a Research Scientist at Xerox: see my new webpage at XRCE.

I am a former PhD student and Post-Doc of the LEAR team, in the LJK laboratory and INRIA Rhône-Alpes research center. My doctoral school was the Grenoble INP institute of technology. I was funded by the Microsoft Research-INRIA joint center. I mainly work in the fields of computer vision and machine learning, with a keen interest in automatic video content interpretation.

I come from the ENSIMAG engineering school of IT and applied Mathematics. I also have a Master's degree in Machine Learning from the Université Joseph Fourier (UJF) of Grenoble.

I successfully graduated on October 25th, 2012 :-)

On the 1st of October 2008, I started my PhD thesis under the supervision of the head of the LEAR team, Cordelia Schmid, and Zaid Harchaoui.

The title of my PhD subject is:

This PhD thesis (click here for my manuscript and presentation) addresses visual event and action recognition in natural videos, a problem that is receiving increasing attention due to the widespread availability of personal video capture devices, the low cost of multimedia storage, and the ease of content sharing. The goal is to develop video representations and recognition techniques that can be applied to a wide range of visual events in real-world multimedia data such as movies and internet videos. In particular, I investigate how an action can be decomposed, what its discriminative structure is, and how to use this information to accurately represent video content.

The main challenge I address lies in how to build models of actions that are simultaneously information-rich --- in order to correctly differentiate between different action categories --- and robust to the large variations in actors, actions, and videos present in real-world data.

The goal of this work is to find when a short human action (like opening a door) is performed in large real-world video databases. We describe an action as a sequence of atomic action units, termed actoms, which are semantically meaningful temporal parts that are characteristic for the action. We learn both the content and the temporal structure of actions. The results are published at the CVPR 2011 conference in the paper Actom Sequence Models for Efficient Action Detection.

More details are available on the project page.
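As a rough illustration of the idea of describing an action by a sequence of temporal parts, here is a minimal sketch, not the model from the paper. It assumes local features already quantized into visual words, hard temporal windows of a fixed `radius` around each actom, and hypothetical names (`actom_sequence_descriptor`, `word_times`, `actom_centers`) chosen only for this example.

```python
def actom_sequence_descriptor(word_times, actom_centers, radius, vocab_size):
    """Concatenate one normalized visual-word histogram per actom.

    word_times: list of (time, word_id) pairs for local features (hypothetical input).
    actom_centers: temporally ordered actom positions, in the same time unit.
    radius: half-width of the hard window around each actom (an assumption).
    vocab_size: number of visual words.
    """
    descriptor = []
    for center in actom_centers:
        hist = [0.0] * vocab_size
        # Count the visual words that fall inside this actom's temporal window.
        for time, word in word_times:
            if abs(time - center) <= radius:
                hist[word] += 1.0
        total = sum(hist)
        if total > 0:
            hist = [count / total for count in hist]
        # Keeping the histograms in actom order preserves the temporal structure.
        descriptor.extend(hist)
    return descriptor


# Two toy clips with the same overall word counts but opposite temporal order.
forward = [(0, 0), (1, 0), (5, 1), (6, 1)]
backward = [(0, 1), (1, 1), (5, 0), (6, 0)]
print(actom_sequence_descriptor(forward, [0.5, 5.5], 1, 2))
print(actom_sequence_descriptor(backward, [0.5, 5.5], 1, 2))
```

The two toy clips contain the same visual words overall, so a single global histogram would not tell them apart, whereas the concatenated per-actom histograms differ.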

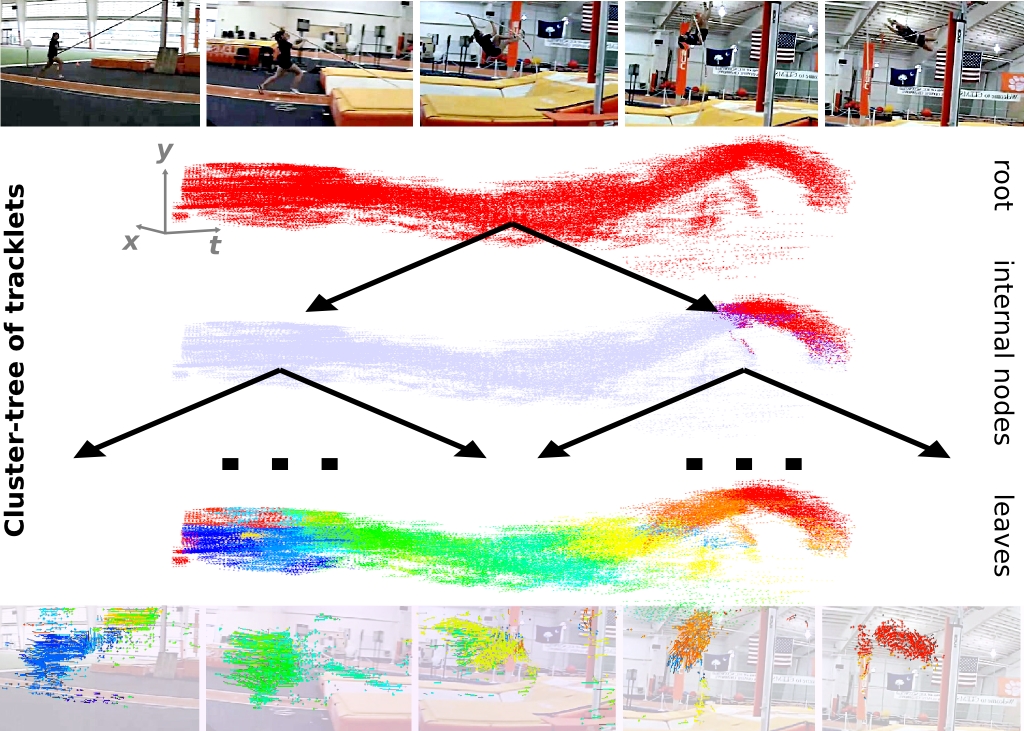

Although simple actions can be represented as sequences of short temporal parts, longer activities, e.g. pole vaulting, are composed of a variable number of sub-events connected by complex spatio-temporal relations. In this project, we learn how to automatically represent activities as a hierarchy of mid-level motion components. This hierarchy is a data-driven decomposition that is specific to each video. The results are published at the BMVC 2012 conference in the paper Recognizing activities with cluster-trees of tracklets. A one-page summary is also available here.

Some minimalistic slides and videos are available here, with more coming soon...

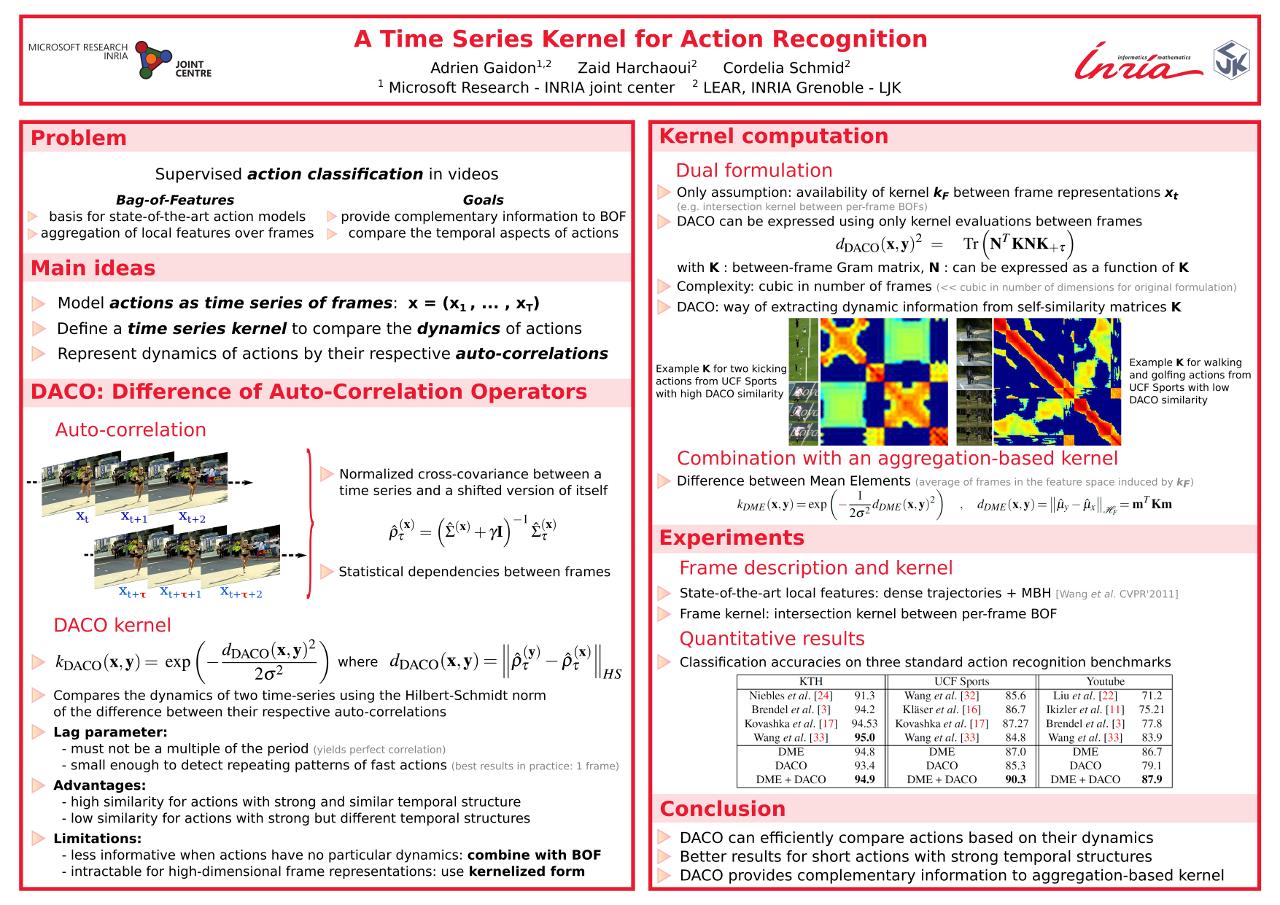

In this work, we address the problem of action recognition by describing actions as time series, and introduce a kernel to compare their dynamical aspects. We propose a new kernel specific to videos, which compares the temporal dependencies of actions. Our time series kernel (called DACO, for Difference of Auto-Correlation Operators) is shown to provide complementary information to standard orderless approaches like bag-of-features. The results are published at the BMVC 2011 conference in the paper A time series kernel for action recognition. A one-page summary is also available here.

More details coming soon...
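To give a feel for what comparing temporal dependencies means, here is a simplified, finite-dimensional sketch. It is not the DACO kernel from the paper: it assumes plain lagged auto-covariance matrices of frame descriptors and a Gaussian kernel on their squared Frobenius distance, and the names `lagged_autocovariance`, `autocovariance_difference_kernel`, `lag` and `gamma` are hypothetical.

```python
import math


def lagged_autocovariance(series, lag=1):
    """Empirical lag-`lag` auto-covariance matrix of a list of d-dim frame vectors."""
    n, d = len(series), len(series[0])
    if n <= lag:
        raise ValueError("series must be longer than the lag")
    mean = [sum(frame[j] for frame in series) / n for j in range(d)]
    centered = [[frame[j] - mean[j] for j in range(d)] for frame in series]
    pairs = n - lag
    return [
        [sum(centered[t][i] * centered[t + lag][j] for t in range(pairs)) / pairs
         for j in range(d)]
        for i in range(d)
    ]


def autocovariance_difference_kernel(a, b, lag=1, gamma=1.0):
    """Gaussian kernel on the squared Frobenius distance between auto-covariances."""
    ca, cb = lagged_autocovariance(a, lag), lagged_autocovariance(b, lag)
    sq_dist = sum((x - y) ** 2 for ra, rb in zip(ca, cb) for x, y in zip(ra, rb))
    return math.exp(-gamma * sq_dist)


# Same frame values, different dynamics: alternating vs. a single step.
alternating = [[0.0], [1.0], [0.0], [1.0]]
step = [[0.0], [0.0], [1.0], [1.0]]
print(autocovariance_difference_kernel(alternating, alternating))
print(autocovariance_difference_kernel(alternating, step))
```

Both toy series contain exactly the same frames, so an orderless representation cannot separate them, while the similarity computed from their temporal dependencies is strictly below that of a series with itself.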

During this project, we aimed at using textual and visual information to extract some video examples of a query action from large movie databases. The paper Mining visual actions from movies, published at the BMVC 2009 conference, presents our approach and gives some results on the TV show Buffy the Vampire Slayer.

More details are available on the project page.

I participated in two challenges during the 2008 summer period:

Between February and June 2008, I did my Master's internship in the LEAR team with Marcin Marszalek and Cordelia Schmid. My work was about combining text and video for action recognition in videos.