I hold a Ph.D. specializing in robotics, computer vision, and natural language processing. Over the last four years, I pioneered robot learning research in my team and developed methods that enable robots to learn vision- and language-guided behaviors from data. In five publications at top-tier conferences, I demonstrated the advantages of my work over classical control algorithms. I also developed a method to transfer complex skills learned in simulation to real-world UR5 robots. I am now excited about the next challenge of applying my research knowledge to real-world products.

Email / Google Scholar / LinkedIn / GitHub / CV

My research interests include robotics, reinforcement and imitation learning, simulation-to-reality transfer, natural language processing, and vision-and-language navigation. During my Ph.D. studies, I was advised by Cordelia Schmid and co-advised by Ivan Laptev. I worked with Chen Sun during my internship at Google Research.

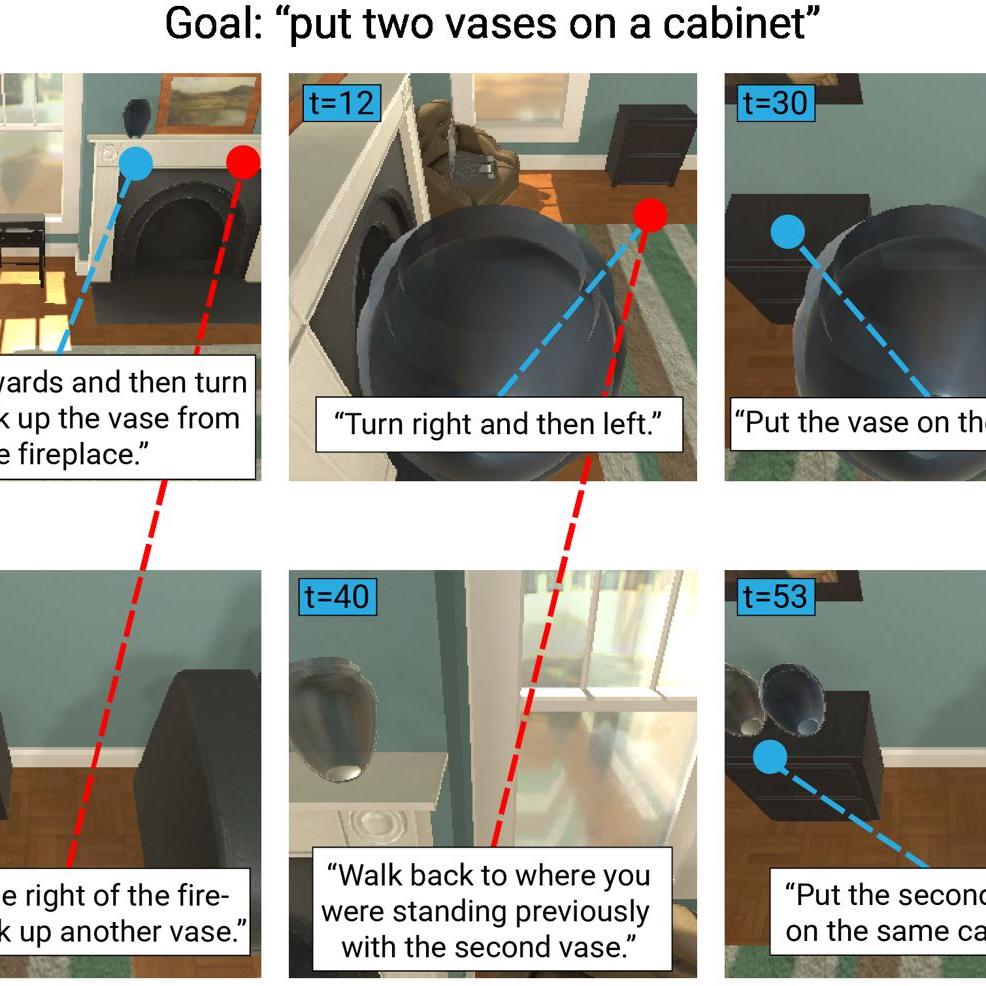

Alexander Pashevich, Cordelia Schmid, Chen Sun. ICCV, 2021. bibtex / code / website

A multimodal transformer-based architecture for vision-and-language navigation (VLN) that improves results on a challenging task by 74%.

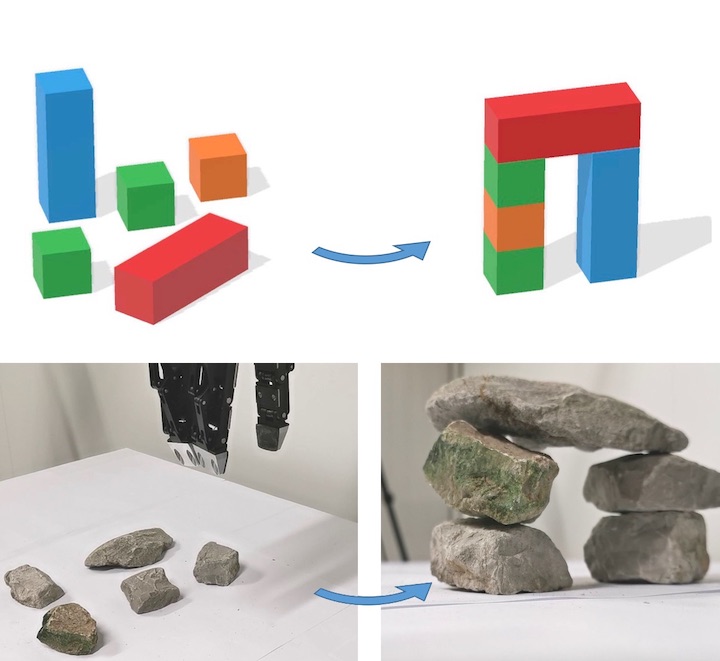

Alexander Pashevich*, Igor Kalevatykh*, Ivan Laptev, Cordelia Schmid. IROS, 2020. bibtex / website / video

An approach that learns to build shapes from disassembly, combining learning in a low-dimensional state space with learning in a high-dimensional observation space.

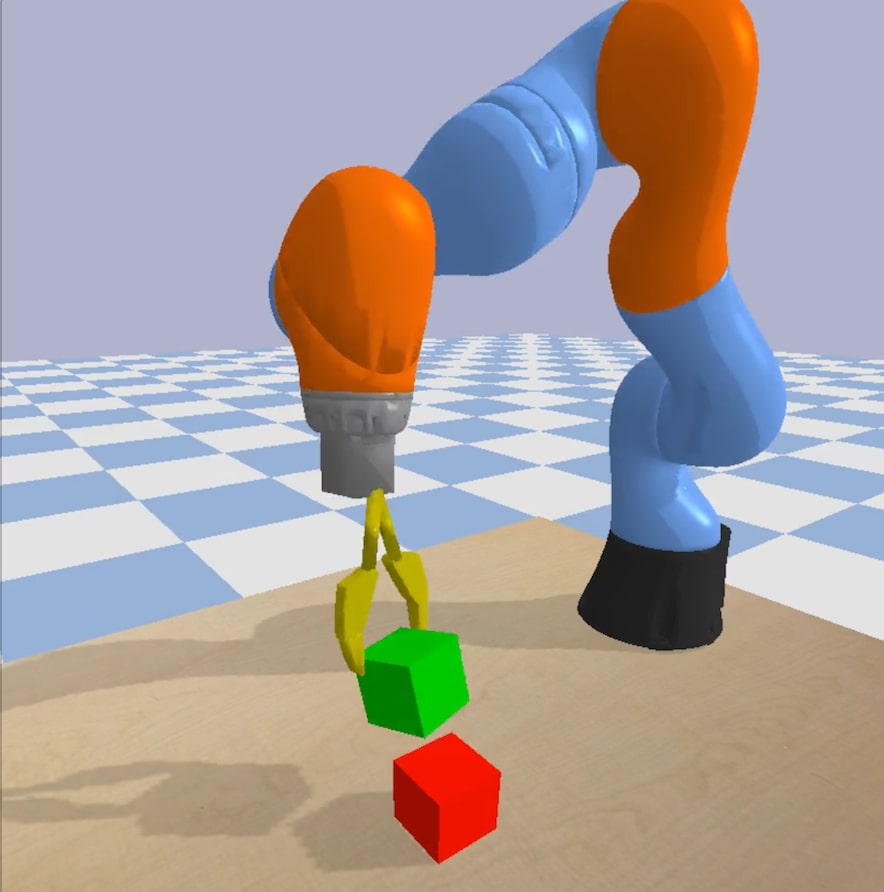

Robin Strudel*, Alexander Pashevich*, Igor Kalevatykh, Ivan Laptev, Josef Sivic, Cordelia Schmid. ICRA, 2020. bibtex / code / website / video

A reinforcement learning approach to task planning that learns to combine primitive skills acquired from demonstrations.

Alexander Pashevich*, Robin Strudel*, Igor Kalevatykh, Ivan Laptev, Cordelia Schmid. IROS, 2019. bibtex / code / website / video

An approach for transferring policies learned in simulation to real-world robots by exploiting parallel computation.

Alexander Pashevich, Danijar Hafner, James Davidson, Rahul Sukthankar, Cordelia Schmid. NeurIPS Deep RL Workshop, 2018. bibtex / poster

A hierarchical reinforcement learning approach for learning from sparse rewards.

Richard Marriott, Alexander Pashevich, Radu Horaud. Pattern Recognition Letters, 2018. bibtex

An algorithm for unsupervised extraction of piecewise planar models from depth data using constrained Gaussian mixture models.
